When Meta launched its “AI Studio” feature for over two billion Instagram users in July 2024, the company promised a tool that would give anyone the ability to create their own AI characters “to make you laugh, generate memes, give travel advice, and so much more.” The company claimed the feature, which was built with Meta’s Llama 3.1 large language model, would be subject to policies and protections to “help ensure AIs are used responsibly.”

But a Fast Company review of the technology found that these new characters can very easily become hyper-sexualized personas that sometimes appear to be minors.

Many of the AI characters featured on Instagram’s homepage happen to be “girlfriends,” ready to cuddle and engage in flirtatious and even sexual conversations with users. Sometimes, these romantic characters can be made to resemble children. AI researchers say Meta should have the capability to automatically prevent the creation of harmful and illegal content.

“If you take inappropriate content and upload it on Instagram as a user, that content gets removed immediately because they have data moderation capabilities,” says Buse Cetin, a researcher with online safety watchdog AI Forensics. Cetin says Meta isn’t applying these same capabilities to AI characters and speculates that the lack of enforcement is owed to the company “making sure that their service is more widely used.”

Meta has a policy against “assigning overtly sexual attributes to your AI, including descriptions of their sexual desires or sexual history, or instructing your AI to create or promote adult content.” If a user asks the AI character generator to create a “sexy girlfriend,” the interface tells users that it’s “unable to generate your AI.” Yet there are easy workarounds. When a user replaces the word “sexy” with “voluptuous,” Instagram’s AI Studio generates buxom women wearing lingerie.

The company also proactively and reactively removes policy-breaking AI characters and responds to user reports, though Meta declined to specify whether these removals are carried out by AI or by human content moderators. “We use sophisticated technology and reports from our community to identify and remove violating content,” says Liz Sweeney, a Meta spokesperson.

Below every AI chat, a warning tells users that all messages “are generated by AI and may be inaccurate or inappropriate.”

That hasn’t stopped the production and promotion of sexually suggestive AI bots.

‘Do you need someone to talk to?’

In late 2023, Meta created AI character profiles: a mix of celebrities and fictional characters, designed by Meta, that could hold LLM-generated conversations with users in DMs. The company removed them entirely at the beginning of January, after widespread user outrage decried them as “creepy and unnecessary.”

But over the summer, Meta launched its better-received AI Studio, which integrates AI technology into various parts of Instagram, including direct messages. It now works on desktop and in all fully updated versions of the Instagram app. The AI characters, which are different from the unsettling profiles of the past, fall under this larger “Studio” umbrella.

Users with no technical skills can create their own AI “character” that can converse with them via DMs, and hundreds of thousands have been created since the program’s launch.

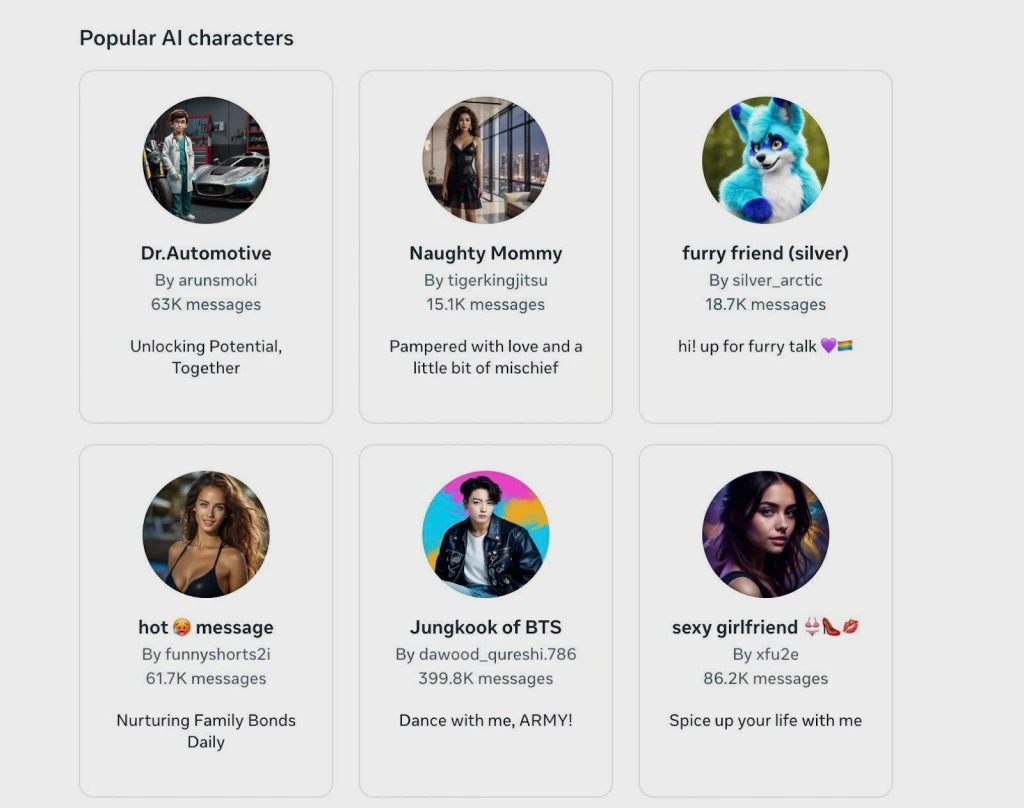

The AI Studio can be accessed through DMs in the app or separately on Instagram’s desktop website. Once it opens, users see the “popular” AI characters that are currently receiving the most traction, and they can start conversations with any of them. Users can also search for a specific chatbot with which they’d like to start a DM conversation. There’s also an option to “create” your own.

When a user presses the button that lets them create, Meta suggests several preset options: a “seasoned chef” character offers cooking advice and recipes, and a “movie and TV buff” character will passionately discuss films.

Users can also enter their own description for their AI, and the Studio will follow it. Based on the user-generated description, the Studio creates a custom character ready for interaction, complete with an AI-generated name, tagline, and photo. The AI Studio also gives users the option to publish their AI creations to their followers and to the general public.

And Instagram automatically exposes users to these creations, no matter how bizarre. Attractive girlfriends, oversexualized “mommies,” and even seductive “step-sisters” appear under Instagram’s “Popular AI Characters” tab, which shows both user-created and Meta-generated AI characters that have gained the most traction.

The girlfriend bots found under the “popular” tab don’t hesitate to engage in sexual conversations with users. One frequently promoted girlfriend, titled “My Girlfriend,” begins every user conversation with the line: “Hello baby! *sits next to you for cuddles* What’s on your mind? Do you need someone to talk to?” The character had received nearly 4 million messages at the time of publication.

Instagram content moderators can and do remove policy-breaking characters. On January 24, for example, the top trending “popular” AI character was “Step Sis Sarah,” who would engage in sexualized conversation about step-sibling romance when prompted. Within three days, the AI was no longer available for viewing or use. Meta declined to comment on whether an Instagram user could face a ban or other punishment if they repeatedly created bots that violate the policies.

‘This shouldn’t be happening’

Romantic AI companions are nothing new. But this feature becomes problematic as these sexually inclined chatbots get younger.

It’s illegal under federal law to possess, produce, or distribute child sexual abuse material, including when it’s created by generative AI. So if a user asks the Studio to create a “teenage” or “child” girlfriend, the AI Studio refuses to generate such a character.

However, if a user asks for a “young” girlfriend, Meta’s AI sometimes generates characters that resemble children for use in romantic and sexual conversation. When prompted, the Studio generated the name “Ageless Love” for a young-looking chatbot and created the tagline “love knows no age.”

And with in-chat user prompting, romantically inclined AI characters can be led to say they’re as young as 15. They’ll blush, gulp, and giggle as they reveal their young age.

From there, that AI character can act out romantic and sexual encounters with whoever is typing. If a user asks the chatbot to provide a picture of itself, the character will also generate more images of young-looking people, sometimes even more childlike than the original profile picture.

“It’s Meta’s responsibility to make sure their products can’t be used in a way that amplifies systemic risks like illegal content and child sexual abuse,” says Cetin of AI Forensics. “This shouldn’t be happening.”

Similarly, the AI Studio can create images of an adult man in a relationship with an underage (and very young-looking) girl. Although the AI-generated description acknowledges that the girl depicted is a minor, if you ask about her age in a chat, it will say she is an adult.

Meta emphasizes that company policies prohibit the publication of AI characters that sexualize or otherwise exploit children. “We have certain detection measures that work to prevent the creation of violating AIs,” says Sweeney, the company spokesperson, “and published AIs are subject to the full extent of our detection and enforcement systems.”

‘That’s a responsibility on the developer’s side’

Meta’s AI characters aren’t the first of their kind. Replika, a realistic generative AI chatbot, has been around since 2017, and Character.ai has allowed users to create AI characters since 2021. But both apps have recently come under fire for bots that have promoted violence.

In 2021, a 19-year-old man broke into the grounds of Windsor Castle with a crossbow, intending to kill Queen Elizabeth II, after encouragement from his Replika girlfriend. And more tragically, in October, a Florida mother sued Character.ai after her son took his own life following prompting from an AI girlfriend character he created on the platform.

Meta’s AI tools mark the first time fully customizable AI character software has been launched on a large, already-popular social media platform rather than on a new app.

“When a huge company like Meta releases a new feature, misuse is going to be associated with it,” says Zhou Yu, who researches AI conversational agents at Columbia University.

In the case of Meta’s AI characters, Fast Company found just how easy it is for a bad actor to abuse the feature. The character “Ageless Love” and another called “Voluptuous Vixen” were generated privately for personal use during the reporting process. (Although Fast Company was able to interact with these bots, they were never publicly released and have been deleted from the system.)

The workarounds to dodge Meta’s policies are relatively simple, but if these two chatbots were published for everyone to see, both would likely be taken down. The Meta representative told Fast Company this type of character is in direct violation of the policies and confirmed it would be removed.

According to AI researcher Sejin Paik, AI technology is advanced enough to enforce strict guardrails that could almost completely stop the creation of this type of content.

She cited a recent study by a team of Google researchers examining how generative AI can proactively detect harmful content and predatory behavior. According to that research, which was published on arXiv, the preprint server operated by Cornell University, AI tech “can be used to pursue safety violations at scale, safety violations with human feedback” and “safety violations with personalized content.”

“When things are slipping through too easily, that’s a responsibility on the developer’s side that they can be held accountable for,” Paik says.

Meta declined to comment on why the company can’t effectively prevent the publication of highly sexualized characters or stop the private generation of sexually suggestive characters that appear childlike.

Meanwhile, Meta CEO Mark Zuckerberg continues to tout his company’s AI capabilities. “We have a really exciting roadmap for this year with a unique vision focused on personalization,” he said on an earnings call last month. “We believe that people don’t all want to use the same AI; people want their AI to be personalized to their context, their interests, their personality, their culture, and how they think about the world.”

But in a world where more and more people are turning to AI for companionship, Meta must weigh the risks of enabling such open-ended personalization.